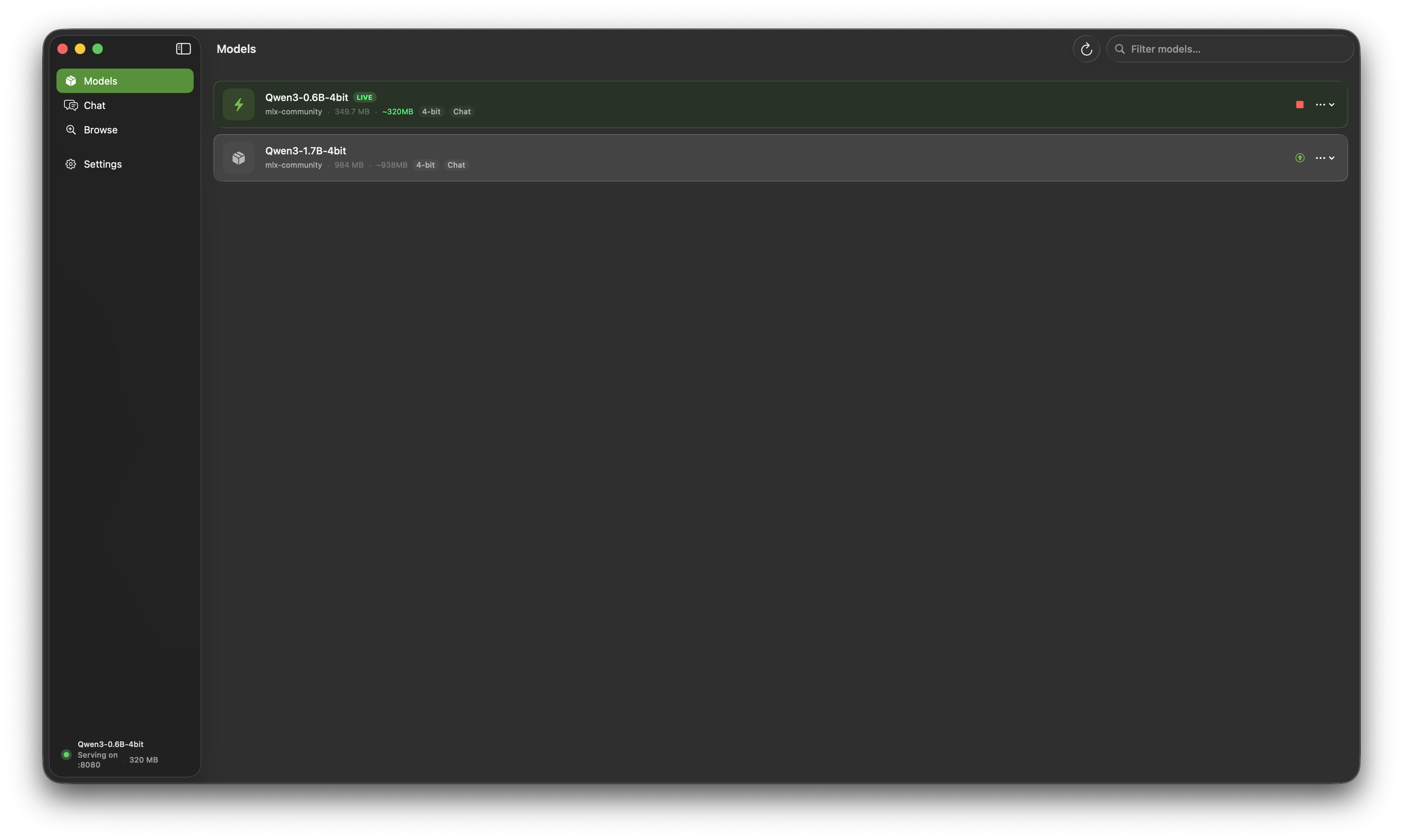

Models

Download, manage, and serve language models locally on your Mac — powered by Apple MLX. No Python. No cloud. No hassle.

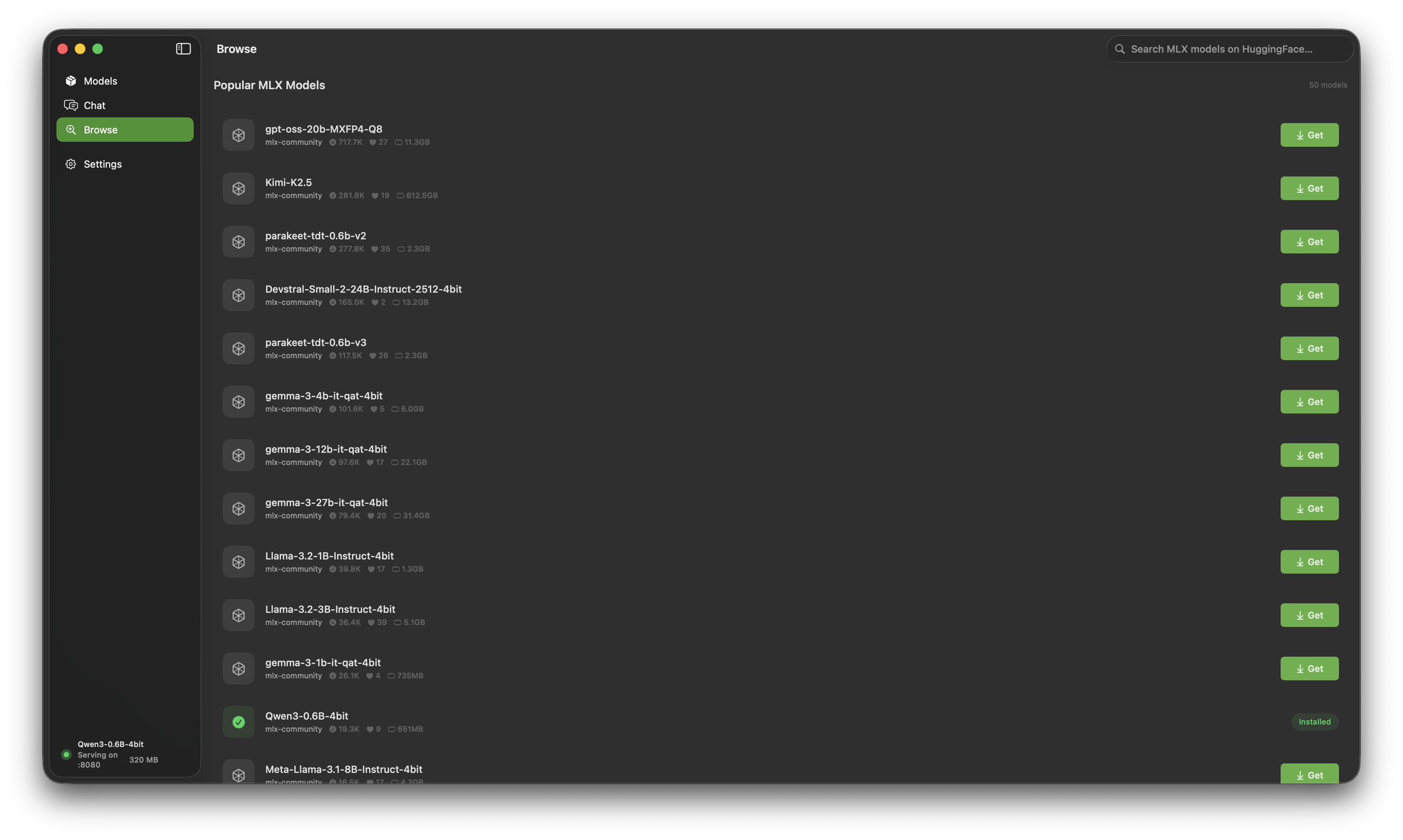

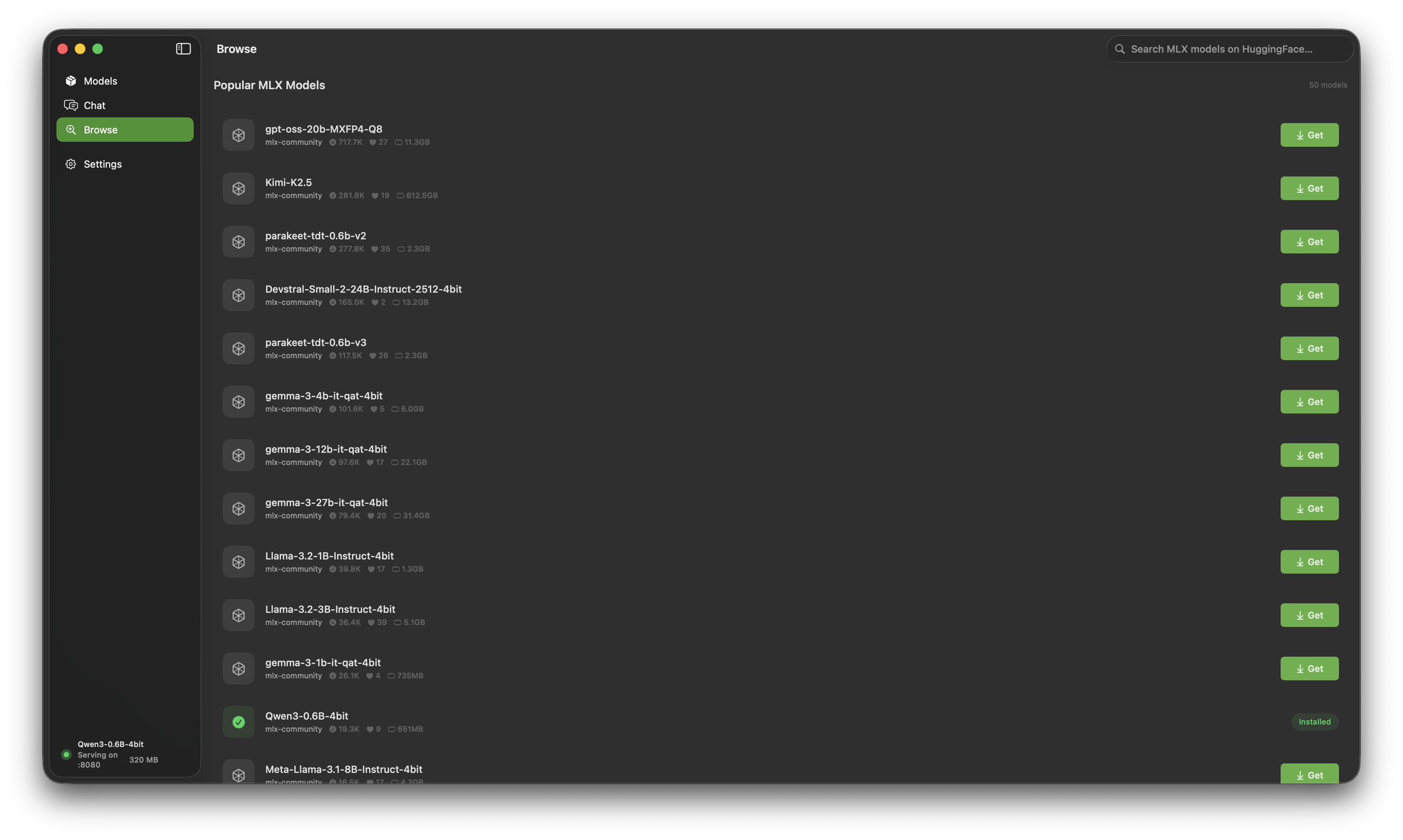

Search and download MLX-optimized models directly from Hugging Face. One click to install.

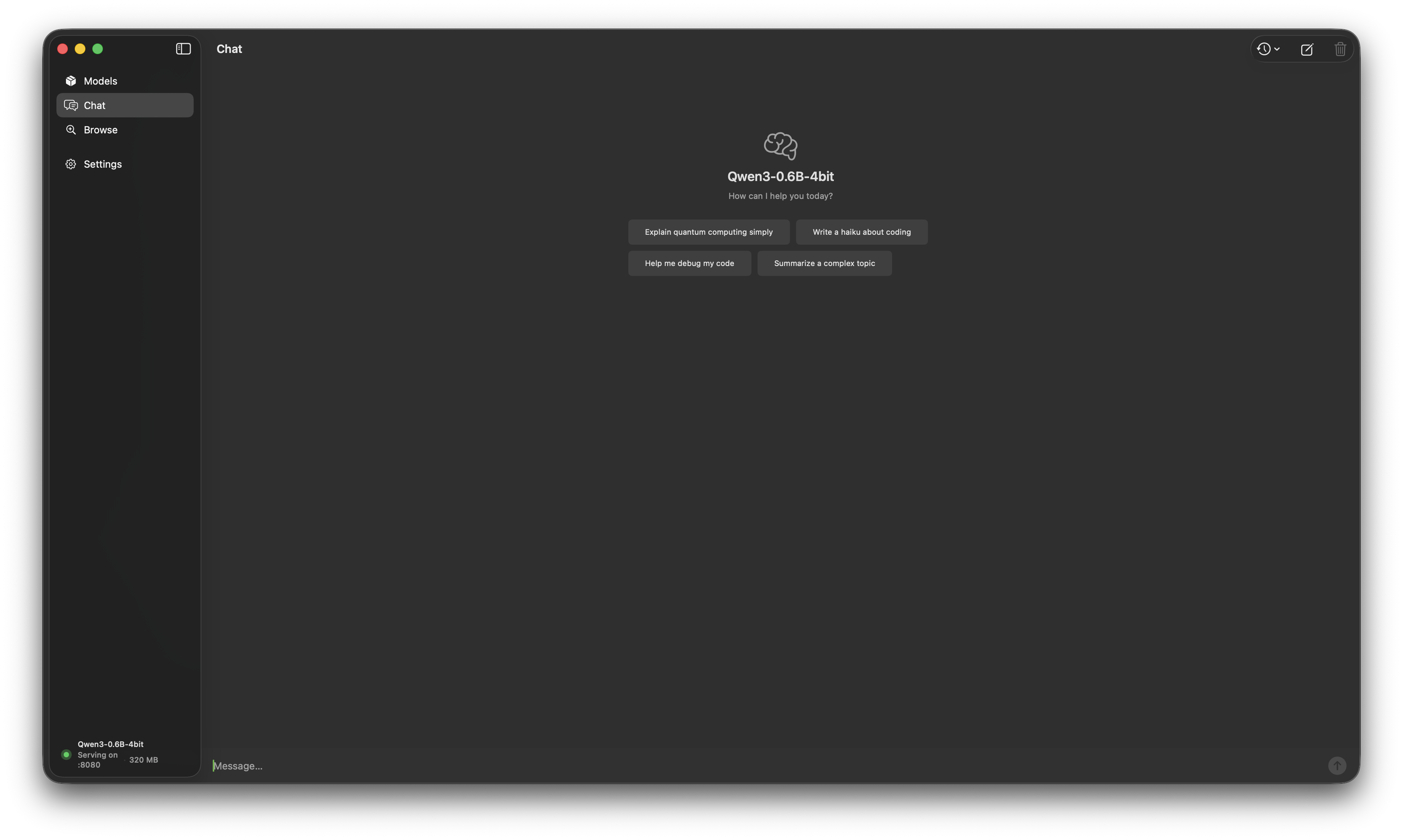

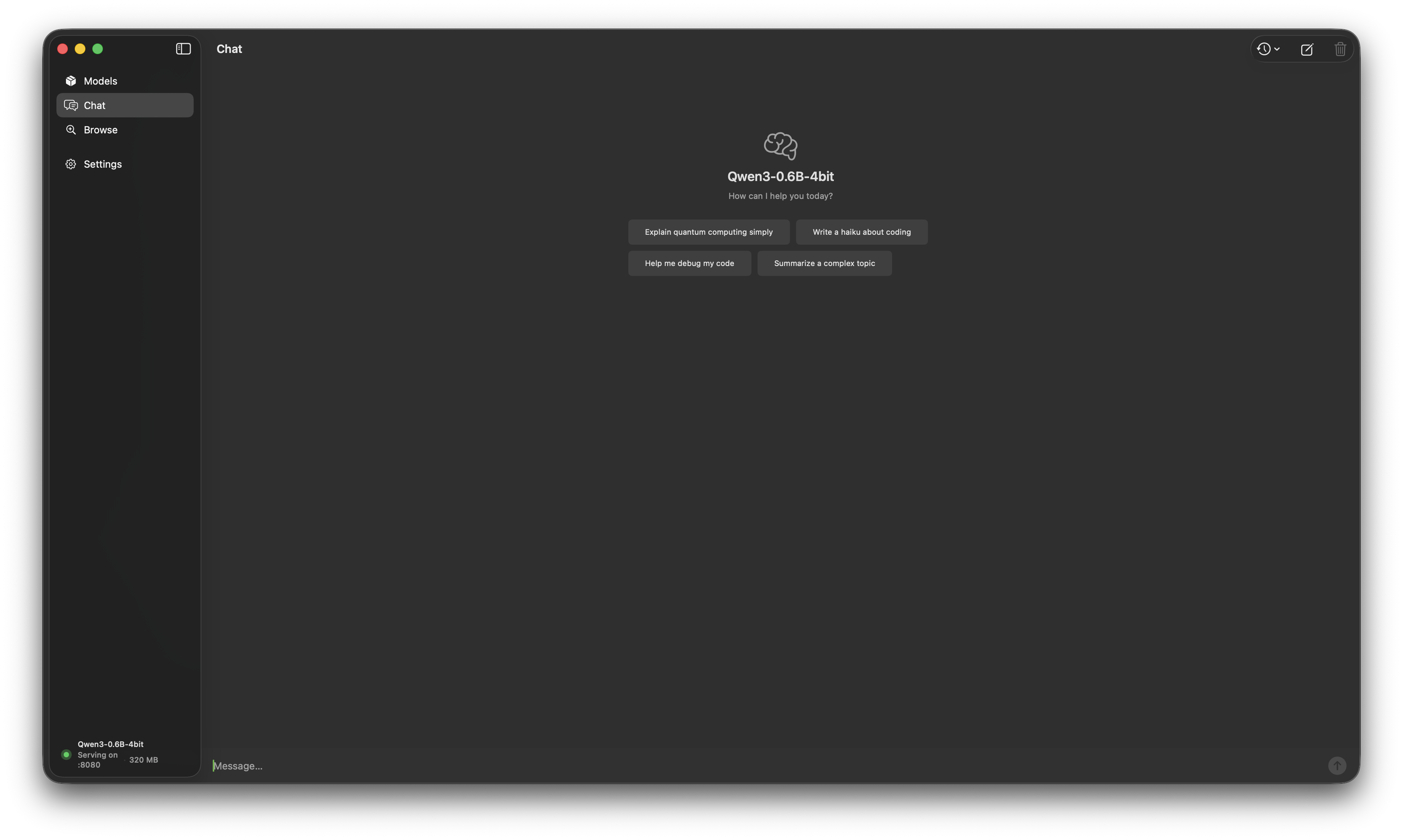

Chat with your models in a native macOS interface. Conversation history, configurable prompts, and streaming responses.

Built-in HTTP server with OpenAI-compatible endpoints. Drop-in replacement for any app that supports the OpenAI API.
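As a rough illustration of what "drop-in" could look like from a client's side, here is a minimal sketch that builds a request in the OpenAI chat-completions shape using only the Python standard library. The host, port, `/v1` prefix, and model name are assumptions made for the example, not values documented here; substitute whatever address and model identifier the app actually reports.

```python
import json
import urllib.request

# Assumption: placeholder address. Replace with the URL the app's server shows.
BASE_URL = "http://localhost:8080/v1"


def build_chat_request(model, user_message, base_url=BASE_URL):
    """Build (but do not send) a POST request in the OpenAI chat-completions shape."""
    payload = {
        "model": model,  # hypothetical model identifier
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(request, timeout=60):
    """Send the request and return the assistant's reply text.

    Assumes the server answers with the usual OpenAI response layout
    (choices[0].message.content).
    """
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server running, `send_chat_request(build_chat_request("my-local-model", "Say hello."))` would return the reply text, where `my-local-model` stands in for a model you have installed. Apps that already use an OpenAI client library would typically only need their base URL pointed at the local server.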

Powered by Apple's MLX framework. Runs entirely on your GPU with zero Python dependencies.

See installed models, GPU memory usage, and model sizes at a glance. Load and unload with one click.

Everything runs on your machine. No data leaves your Mac. No accounts, no telemetry, no cloud.